For the past six months, I’ve been interning with Last.fm’s data team and conducting research for my bachelor’s dissertation. My interest lay in working with Big Data: learning more about data analysis, processing massive amounts of data and solving problems that arise from working with data sets that are too large to be stored on one computer. And what better way to do this than to leave the cosy academic world of my university, move abroad and write my final assignment on a new field in a foreign city, right?

Let me give you a personal account of the work involved in my research on Efficient Record Linkage with MapReduce in Very Large Data Sets.

Y U NO START WITH EXAMPLE?

Say for some reason you are given two sheets of paper with customer data. The first one contains the customers’ names and addresses while the second one lists their names and the results of a recent survey on their favourite colour. Your task is to connect all customers with their favourite colour.

You start with the first line on the one sheet, look at the name and try to find what this customer answered as their favourite colour on the other sheet. You continue doing this until you reach the end of the list.

While doing this customer record matching you notice a few things. Some customers have listed their favourite colour more than once; sometimes it is the same colour, but sometimes it is not. The address data is also far from perfect; there are customers with similar names that all live at the same address. Conversely, one customer with a very unusual name seems to own several houses in the same street. These problem cases demand that you decide whether the same person is meant or not. If so, you pick one address and colour and write down each connection just once.

Now imagine this matching thing becomes a regular task that you have to complete at the end of each week. Oh, and the size of your customer data has also magically increased; it does not fit on two sheets of paper anymore but suddenly takes up billions of them. In this case it is time to invite your friends over to help you with your matching task.

You will have to agree on how you handle duplicate customer records and also distribute the work equally. Then, how do you even determine all the matches in these vast amounts of paper? I mean, hanging out with friends is excellent but going through many pages looking for all occurrences of just one customer quickly becomes dull, as most comparisons will be made unnecessarily. You will have to think of a way to minimise work.

“Unnecessary” quotes

This example illustrates some of the issues we face daily at Last.fm. We often have to integrate data that we received from our partners into our own music catalogue.

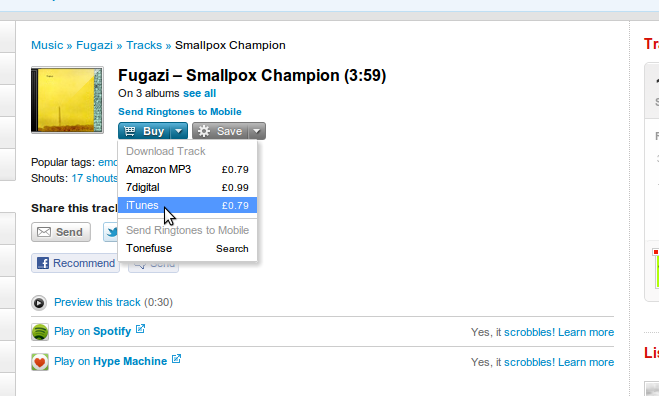

For example, in order to provide those sweet links to Spotify, Hype Machine, Amazon and iTunes on track pages we have to find corresponding entries that relate to similar artists, tracks, or albums in two or more data sets. Generally, this task is known as record linkage, which is a very active research field.

The specific question posed for my dissertation was: “What approaches are there that we can use to improve our data matching tasks at Last.fm?” My findings and conclusions will inform future improvements to these matching tasks.

I first compiled a list of promising and interesting techniques and evaluated them on a small scale. These included approaches for efficiently pre-grouping entries that share a certain similarity, so that fewer comparisons need to be made later (for example, an inverted index and a spatial index), and several metrics that can state how similar two entries are (for instance, metrics introduced by Levenshtein and Dice, but also approaches that first map strings to vectors and then measure the enclosed angle). This allowed me to come up with three combinations of techniques that performed best for our kind of data.
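To make these building blocks a little more concrete, here is a minimal Python sketch of two of the metrics named above (Levenshtein's edit distance and Dice's coefficient, here computed on character bigrams) together with a very simple inverted index for pre-grouping. It is only an illustration and not the code from my dissertation: the function names, the choice of bigrams as index keys and the sample artist names are all assumptions made for this example.

```python
from collections import defaultdict
from itertools import combinations


def levenshtein(a: str, b: str) -> int:
    """Edit distance: fewest insertions, deletions and substitutions turning a into b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # delete char_a
                current[j - 1] + 1,                    # insert char_b
                previous[j - 1] + (char_a != char_b),  # substitute if different
            ))
        previous = current
    return previous[-1]


def bigrams(text: str) -> set:
    """Set of all two-character substrings (an assumed tokenisation)."""
    return {text[i:i + 2] for i in range(len(text) - 1)}


def dice(a: str, b: str) -> float:
    """Dice coefficient on bigram sets: 1.0 means identical sets, 0.0 means disjoint."""
    x, y = bigrams(a), bigrams(b)
    if not x and not y:
        return 1.0
    return 2 * len(x & y) / (len(x) + len(y))


def candidate_pairs(names):
    """Inverted index from bigram to record ids; only records sharing a bigram are paired."""
    index = defaultdict(set)
    for record_id, name in enumerate(names):
        for gram in bigrams(name.lower()):
            index[gram].add(record_id)
    pairs = set()
    for ids in index.values():
        pairs.update(combinations(sorted(ids), 2))
    return pairs


names = ["radiohead", "radio head", "portishead", "abba"]
for left, right in sorted(candidate_pairs(names)):
    print(names[left], "|", names[right], "|",
          levenshtein(names[left], names[right]),
          round(dice(names[left], names[right]), 2))
```

With these four names the index proposes three candidate pairs instead of all six possible ones, because "abba" shares no bigram with the others. On real data a key as fine-grained as a bigram would let far too many pairs through, so treat it purely as a stand-in for a more selective grouping key.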

Still, the problem of scale remained, as working with large files and data sets introduces another layer of complexity to the initial problem of matching data. In recent years, MapReduce has become the number one choice for working with Big Data. One reason for its success is that MapReduce makes it very convenient to distribute data processing over a number of computers. Instead of having one computer doing all computations one after the other, many computers can work on small tasks at the same time, and the combined efforts generate a final result. The most commonly used implementation of MapReduce is Hadoop.
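If you have never seen the programming model, the following single-process Python sketch may help. It imitates the three stages (map, shuffle, reduce) using the customer example from the beginning of this post. To be clear, this is not Hadoop's API and nothing here is distributed; the function names and the sample records are made up for illustration, and normalising names to lower case is just an assumed way of deciding that two records belong together.

```python
from collections import defaultdict


def map_phase(records, mapper):
    """Apply the mapper to every record; each call may emit several (key, value) pairs."""
    for record in records:
        yield from mapper(record)


def shuffle(pairs):
    """Group values by key. A real framework sorts the pairs and moves them between machines."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups, reducer):
    """Call the reducer once per key with all values collected for that key."""
    return {key: reducer(key, values) for key, values in groups.items()}


def tag_by_name(record):
    """Mapper: emit the normalised customer name as key, remembering which sheet the value came from."""
    source, name, value = record
    yield name.lower(), (source, value)


def link_customer(name, values):
    """Reducer: collect the distinct addresses and colours seen for one customer."""
    addresses = sorted({value for source, value in values if source == "address"})
    colours = sorted({value for source, value in values if source == "colour"})
    return {"addresses": addresses, "colours": colours}


# Hypothetical rows from the two sheets of paper: (sheet, customer name, value).
records = [
    ("address", "Ada", "1 Main St"),
    ("colour", "ada", "green"),
    ("address", "Bob", "2 High St"),
    ("colour", "Bob", "red"),
    ("colour", "Bob", "blue"),
]

linked = reduce_phase(shuffle(map_phase(records, tag_by_name)), link_customer)
print(linked)
```

The mapper and the reducer each look at only one record or one key at a time, which is what allows a framework to spread them over many machines. The grouping in between is the stage where data has to be sorted and moved around.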

MapReduce removes a lot of work for the programmer (for example, writing code that distributes work, collects results and reacts to failures), but it also demands that a problem be expressible within the constraints that MapReduce introduces. These constraints make it necessary to investigate whether MapReduce really is the right tool for a given task. For example, techniques that worked well with small amounts of data might suddenly perform much worse once the data grows beyond a certain size.

To adapt the previously identified combinations to MapReduce, I switched to the Cascading framework. As mentioned, when you develop a program for MapReduce you will have to “think” in its programming model, which can sometimes be a painful and slow process. Cascading, however, abstracts the underlying MapReduce model using workflows and allows one to write very complex distributed programs in less time. We have been using Cascading extensively in the data team and we love it.

In brief, my findings were that you shouldn’t rely on MapReduce alone for data matching, as the record linkage process is difficult to map as a whole onto the MapReduce model. For example, the biggest performance bottleneck was the sorting and distributing of entries prior to making the comparisons, which is the very step that is supposed to speed up the matching by pre-grouping entries with a certain similarity. I concluded that it is better to introduce another system for storing intermediary results (for instance, a distributed key-value store like memcached) or to evaluate other approaches that I didn’t have time to cover in depth.

Gain experience points, spend them all on coffee.

Last.fm is a dedicated bunch of people and it was great to learn from them how to tackle a problem properly. This environment quickly drew me in and motivated me. I remember sitting at my desk during my first afternoon and thinking: “This is exactly what I have been looking for.” I was trusted with steering my research in the right direction. I was in total control of my decisions, and could freely experiment and make mistakes (on one occasion one of my experiments on the Hadoop cluster went berserk and managed to hog terabytes of storage space), and everyone on my team supported me as much as possible; they were always approachable, no matter how busy they were.

I enjoyed every day although it was, of course, much more work than I had expected; I was fine-tuning my dissertation and polishing my paragraphs right up until delivering it to the printers. Then, on a Friday about a month ago, I finally submitted it to my university.

What else do I take with me from six exciting months in London? I went to lots of great gigs and consumed vast quantities of excellent coffee. I was introduced to many people and ideas, had countless interesting conversations and got a good introduction to the local tech scene.

I must also have made a valuable contribution to my team. That is why my stay in the data team has been extended for a couple of months (“at least”, to quote a colleague). After all, there’s just so much more to learn.

Comments

rebeka

8 November, 15:44

thnks Stefan blogs best.

Loki-Afro

8 November, 17:37

Hey, I’m a Java programmer and that sounds realy nice to me! Someday i’ll work at last.fm too :P

Tims

9 November, 18:27

Awesome post Stefan. U Rck.

MXMLLN

10 November, 02:06

¿Are all Last.FM blogs for developers? Luckily, I’m also a programming student…

Fırsat Scripti

21 November, 03:54

thx Stephan, good blog

Don

21 November, 10:11

The era of big data is upon us. Over the past 20 years, we have seen a huge increase in the amount of data that the world produces.

Blitz Review

23 November, 12:49

Stephan, this seems to be one of the cruel realities if you have a lot of clients, everyone with its ideas.

Andy Cotgreave

24 November, 10:05

Great post: well written and a great insight into your work at last.fm. Good luck for the future!
